OPERATING SYSTEM NOTES

| Site: | meja.gnomio.com |
|---|---|
| Course: | meja.gnomio.com |
| Book: | OPERATING SYSTEM NOTES |
| Date: | Tuesday, 10 June 2025, 4:46 AM |

1. INTRODUCTION TO OPERATING SYSTEM


An operating system (OS) is system software that manages computer hardware and software resources and provides common services for computer programs. All computer programs, excluding firmware, require an operating system to function.

An operating system is a program that acts as an interface between the user and the computer hardware and controls the execution of all kinds of application programs and supporting system software (i.e., utilities).

Basic Importance of the Operating System

1) The operating system acts as a resource manager, using the computer's resources in a cost-effective manner. It keeps track of the different jobs, their results, and their locations in memory.

2) The operating system schedules jobs according to their priority, passing control from one program to the next. This job-control function is especially important when there are several users (a multi-user environment).

3) The operating system provides a communication link between the user and the system, and helps the user run application programs properly and get the required output.

4) The operating system can load programs into memory only when they are required, and it does not need to keep all of its own parts in memory at the same time. This leaves the user more memory to work in the required package conveniently and easily.

5) The operating system helps the user with file management. Creating directories and saving files in them is a very important feature for organizing data according to the user's needs.

6) Multiprogramming is a very important feature of the operating system: it schedules and controls the running of several programs at once.

7) It provides program editors that help the user modify and update program code.

8) Debugging aids provided by the operating system help the user detect and remove errors in programs.

9) The disk-maintenance ability of the operating system checks the validity of data stored on diskettes and other storage media and corrects erroneous data.

1.1. Operating Systems Terminology


Processes

- A process is a program that is currently running.
- It includes the program code, data, and execution status.
- A program is just a set of instructions, while a process is the program in action.
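To make the program/process distinction concrete, here is a small Python sketch (an illustration, not part of the original notes). It launches the same program file, the Python interpreter found at `sys.executable`, twice; each launch becomes a separate process with its own process ID (PID), even though the instructions are identical. The helper name `run_child_and_get_pid` is hypothetical.

```python
import os
import subprocess
import sys

def run_child_and_get_pid() -> int:
    """Start the Python interpreter as a new process and return the PID it reports."""
    result = subprocess.run(
        [sys.executable, "-c", "import os; print(os.getpid())"],
        capture_output=True,
        text=True,
        check=True,
    )
    return int(result.stdout.strip())

parent_pid = os.getpid()             # the process running this script
child_1 = run_child_and_get_pid()    # same program file, first run
child_2 = run_child_and_get_pid()    # same program file, second run

print("parent process:", parent_pid)
print("child processes:", child_1, child_2)
```

The exact PID values differ on every run; what matters is that each running copy is a distinct process.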

Files

- A file is a collection of data stored on a computer with a specific name (filename).
- Almost everything stored on a computer is in a file.
- Types of files include:
  - Text files – store written content
  - Program files – store software code
  - Data files, directory files, etc. – store other kinds of data

System Calls

- A system call is how a program asks the operating system to do something.
- Examples of what system calls do:
  - Run a process
  - Use hardware (like a printer or hard drive)
  - Communicate with the OS kernel
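As an illustration, Python's `os.open`, `os.write`, `os.read` and `os.close` are thin wrappers that, on POSIX systems, issue the `open`, `write`, `read` and `close` system calls directly. The sketch below (the file name `demo.txt` is an arbitrary choice) asks the kernel to create a file, write to it, read it back and delete it.

```python
import os
import tempfile

directory = tempfile.mkdtemp()
path = os.path.join(directory, "demo.txt")   # arbitrary example file name

# open(2): ask the kernel for a file descriptor, creating the file if needed
fd = os.open(path, os.O_CREAT | os.O_WRONLY | os.O_TRUNC, 0o644)
written = os.write(fd, b"hello, kernel\n")   # write(2): returns the number of bytes written
os.close(fd)                                 # close(2): release the descriptor

fd = os.open(path, os.O_RDONLY)
data = os.read(fd, 100)                      # read(2): read up to 100 bytes
os.close(fd)

os.remove(path)                              # unlink(2): delete the file
os.rmdir(directory)                          # rmdir(2): delete the directory

print(written, data)
```

Higher-level calls such as Python's built-in `open()` end up making these same system calls underneath.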

Shell and Kernel

- Shell:
  - A user interface (usually a command line) to interact with the OS.
  - Examples: command.com, sh, bash, csh.
- Kernel:
  - The core of the OS.
  - Manages the system's memory, processes, files, and devices.
  - Loads first when the OS starts and stays protected in memory.

Virtual Machines (VMs)

- A virtual machine is software that acts like a real computer.
- It can run programs and operating systems as if it were a separate device.
- Multiple VMs can run on a single physical machine (host).
- Each VM is called a guest.

1.2. The History of Operating Systems

1st Generation (1940s–mid-1950s) — No Operating System

- Hardware: Vacuum tubes and punch cards
- OS Role: Non-existent
- Programming: Done manually in machine language
- Execution: One job at a time, manually loaded
- Examples: ENIAC, UNIVAC
- Key Characteristics:
  - No batch processing
  - Very limited automation
  - Human operators managed all tasks

2nd Generation (mid-1950s–mid-1960s) — Batch Processing Systems

- Hardware: Transistors, magnetic tapes
- OS Development: Primitive operating systems started appearing
- Job Handling: Batch systems – jobs grouped and processed sequentially
- Programming: Assembly language, Fortran, COBOL
- Key Features:
  - Job Control Language (JCL)
  - Offline input/output (I/O) handling
  - No interaction with users during job execution
- Examples:
  - IBM 7094 with IBSYS
  - General Motors OS (early batch OS)

3rd Generation (mid-1960s–1970s) — Multiprogramming and Time-Sharing

- Hardware: Integrated Circuits (ICs)
- Major Leap: Multitasking introduced
- OS Evolution:
  - Multiprogramming (multiple jobs in memory)
  - Time-sharing (interactive user sessions)
- Key Features:
  - Virtual memory
  - Spooling (Simultaneous Peripheral Operations On-Line)
  - File systems and command-line interfaces
- Examples:
  - IBM OS/360
  - MULTICS (inspired UNIX)
  - CTSS (Compatible Time-Sharing System)

4th Generation (1970s–1990s) — Personal Computers and Networking

- Hardware: Microprocessors (Intel 8080, 80286, etc.)
- OS for PCs: Graphical User Interfaces (GUIs) emerge
- Networking: Local Area Networks (LANs)
- Key Developments:
  - Operating systems become user-friendly
  - Rise of commercial operating systems like MS-DOS and Windows
  - UNIX gains popularity for academic and enterprise use
- Examples:
  - MS-DOS
  - Apple Macintosh System Software
  - Windows 1.0 to 95
  - UNIX (AT&T, BSD variants)

5th Generation (1990s–present) — Distributed, Mobile, and Cloud OS

- Trends:
  - Mobile computing (smartphones, tablets)
  - Distributed systems and cloud computing
  - Virtualization and containerization (e.g., Docker)
- Key Features:
  - Multicore and parallel processing
  - Real-time and embedded OS
  - Internet-connected devices (IoT)
- Examples:
  - Windows NT to Windows 11
  - Linux (Ubuntu, Red Hat, Android)
  - macOS, iOS
  - Android OS
  - Cloud OS: Google Fuchsia, ChromeOS

6th Generation (Emerging/Future) — AI-Driven and Quantum OS

- Possibilities:
  - AI-integrated OS for context-aware systems
  - OS for quantum computing
  - Edge computing and real-time analytics
- Research Areas:
  - Self-healing systems
  - Predictive performance tuning
  - Autonomic computing

2. PROCESS MANAGEMENT

A process is defined as an entity which represents the basic unit of work to be implemented in a system.

To put it in simple terms, we write our computer programs in a text file and when we execute this program, it becomes a process which performs all the tasks mentioned in the program.

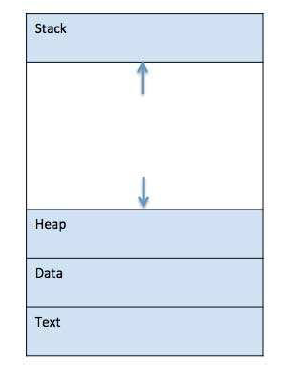

When a program is loaded into memory and becomes a process, its memory can be divided into four sections: stack, heap, text, and data. The image above shows a simplified layout of a process inside main memory:

1. Stack: The process stack contains temporary data such as method/function parameters, return addresses, and local variables.

2. Heap: Memory that is dynamically allocated to the process during its runtime.

3. Text: The compiled program code (machine instructions). The process's current activity is represented by the value of the Program Counter and the contents of the processor's registers.

4. Data: This section contains the global and static variables.

2.1. The Process Model

Process Model

What is a Process Model?

- A process model is a general description of how similar types of processes behave.
- It serves as a template used to create specific processes during development.

Uses of Process Models:

- Descriptive – Shows what actually happens during a process.
- Prescriptive – Explains how a process should be done (rules/guidelines).
- Explanatory – Gives reasons behind process steps and helps analyze choices.

Process Levels:

- Organized in a hierarchy (top to bottom).
- Each level should have clear details (e.g., who performs each step).

Threads

What is a Thread?

- A thread is a unit of execution within a process that can be scheduled independently.
- Think of it as a lightweight process.
- Multiple threads can exist within a single process, sharing its memory and resources.

Why Use Threads?

- Faster and more efficient to create than new processes.
- Great for parallel tasks (e.g., web servers, background tasks).
- Require fewer system resources.
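A minimal Python sketch of several threads inside one process sharing the same memory. The variable name `counter` and the thread and increment counts are arbitrary; the `threading.Lock` keeps the shared update safe. In standard CPython builds the global interpreter lock stops CPU-bound threads from running Python code truly in parallel, so this demonstrates shared memory rather than a speed-up.

```python
import threading

counter = 0                 # one variable, visible to every thread in this process
lock = threading.Lock()     # protects counter from simultaneous updates

def worker(increments: int) -> None:
    """Add 1 to the shared counter `increments` times."""
    global counter
    for _ in range(increments):
        with lock:          # only one thread may update counter at a time
            counter += 1

threads = [threading.Thread(target=worker, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()               # all four threads run inside the same process
for t in threads:
    t.join()                # wait for every thread to finish

print(counter)              # 40000
```

No interprocess communication is needed: every thread reads and writes `counter` directly, which is exactly why the lock is required.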

🔄 Process vs Thread

| Feature | Process | Thread |
|---|---|---|
| Independence | Independent | Share resources with others |
| Memory | Separate memory space | Shared memory |
| Creation | Costly | Cheap |
| Communication | Needs interprocess communication | Easy, direct sharing |

Types of Threads

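1. User-Level Threads

- Managed in user space (not visible to the OS kernel).
- Faster, since thread operations need no kernel calls.
- Limitation: if one thread makes a blocking system call, the whole process (all its threads) blocks.

2. Kernel-Level Threads

- Managed by the OS kernel.
- Slower to create and switch, but better for blocking operations (other threads can keep running).
- Allow true parallel execution on multiple CPUs.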

Multithreading Models

1. Many-to-One

- Many user threads map to one kernel thread.
- No parallelism: only one thread can run at a time, even on multiple CPUs.
- One blocked thread blocks all.

2. One-to-One

- Each user thread has its own kernel thread.
- True parallelism, but high overhead (every user thread needs a kernel thread).
- Used in Windows NT/2000.

3. Many-to-Many

- Many user threads map to many (an equal or smaller number of) kernel threads.
- Best of both worlds – flexibility and performance.
- Used in older versions of Solaris.

Context Switching

What is it?

- Switching the CPU from one process/thread to another.

Steps:

- Save the current process's register values (in its Process Control Block).
- The OS scheduler decides which process runs next.
- Load the next process's register values.
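A toy simulation of the save and load steps, assuming a made-up CPU that has only two registers (`pc` and `acc`). The class name `SavedContext` is hypothetical and stands in for the register area of a real PCB; an actual context switch is done by kernel code with hardware support, not by application code like this.

```python
from dataclasses import dataclass

@dataclass
class SavedContext:
    """Hypothetical per-process area where the OS keeps saved registers."""
    pid: int
    registers: dict

# A made-up CPU with just a program counter (pc) and an accumulator (acc).
cpu = {"pc": 0, "acc": 0}

def context_switch(current: SavedContext, nxt: SavedContext) -> None:
    """Save the running process's registers, then load the next one's."""
    current.registers = dict(cpu)   # save: copy the CPU registers into memory
    cpu.clear()
    cpu.update(nxt.registers)       # load: restore the chosen process's registers

a = SavedContext(pid=1, registers={"pc": 100, "acc": 0})
b = SavedContext(pid=2, registers={"pc": 500, "acc": 0})

cpu.update(a.registers)   # process A is running
cpu["pc"] += 3            # A executes a few instructions...
cpu["acc"] = 42           # ...and computes something

context_switch(a, b)      # the scheduler has picked B
print(cpu)                # {'pc': 500, 'acc': 0}

context_switch(b, a)      # later, A is picked again
print(cpu)                # {'pc': 103, 'acc': 42}: A resumes where it stopped
```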

Advantages of Threads

- Faster context switching
- Shared memory = easier communication
- Ideal for apps needing parallel tasks (e.g., game engines, servers)

Disadvantages of Threads

- No memory protection between threads of the same process
- Security issues (a faulty or compromised thread can read or corrupt data belonging to every other thread in the process)
- Blocking system calls can affect all threads (if not properly managed)

2.2. Process States Life Cycle

Process States

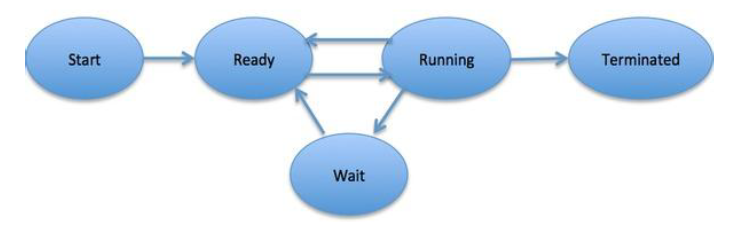

When a process executes, it goes through various states. While the names and number of states may vary slightly between operating systems, these are the five common states:

| No. | State | What It Means |
|---|---|---|
| 1️⃣ | Start | Process is created for the first time. |
| 2️⃣ | Ready | Process is waiting for CPU to be assigned by the OS scheduler. |
| 3️⃣ | Running | Process is currently using the CPU and being executed. |
| 4️⃣ | Waiting | Process is waiting for some resource (e.g., user input, file, device). |
| 5️⃣ | Terminated | Process has finished execution or is killed; it will be removed from memory. |

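State Transitions (Example):

- Start → Ready → Running → Waiting → Ready → Running → Terminated
- A waiting process returns to Ready (not directly to Running) once its resource is available; a running process that is preempted also goes back to Ready.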
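The five-state life cycle can be sketched as a small state machine in Python. The transition table is a simplified assumption based on the states above; real operating systems add more states (for example, suspended states) and also allow a process to be killed while it is Ready or Waiting.

```python
from enum import Enum

class State(Enum):
    START = "Start"
    READY = "Ready"
    RUNNING = "Running"
    WAITING = "Waiting"
    TERMINATED = "Terminated"

# Simplified set of legal transitions between the five states.
TRANSITIONS = {
    State.START: {State.READY},                  # admitted by the OS
    State.READY: {State.RUNNING},                # dispatched by the scheduler
    State.RUNNING: {State.READY,                 # preempted (e.g., time slice over)
                    State.WAITING,               # waits for I/O or an event
                    State.TERMINATED},           # finishes or is killed
    State.WAITING: {State.READY},                # the awaited resource is available
    State.TERMINATED: set(),                     # no way out
}

def run_path(path):
    """Return True if every step in `path` is a legal transition."""
    for current, nxt in zip(path, path[1:]):
        if nxt not in TRANSITIONS[current]:
            raise ValueError(f"illegal transition {current.value} -> {nxt.value}")
    return True

ok = [State.START, State.READY, State.RUNNING, State.WAITING,
      State.READY, State.RUNNING, State.TERMINATED]
print(run_path(ok))   # True
```

Trying the path Waiting → Running raises `ValueError`, matching the rule that a waiting process must pass through Ready first.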

Process Control Block (PCB)

A PCB is like a file or record that the Operating System keeps to track every process. It stores everything the OS needs to manage and switch between processes.

| No. | Field | What It Stores |
|---|---|---|
| 1️⃣ | Process State | Current state: Ready, Running, Waiting, etc. |
| 2️⃣ | Process Privileges | What the process is allowed to access (e.g., memory, devices). |
| 3️⃣ | Process ID (PID) | A unique number to identify each process. |
| 4️⃣ | Pointer | Link to the parent or related processes. |
| 5️⃣ | Program Counter (PC) | The address of the next instruction to be executed. |
| 6️⃣ | CPU Registers | Data stored in CPU registers (saved during context switching). |
| 7️⃣ | CPU Scheduling Info | Priority, queue pointers, scheduling info. |
| 8️⃣ | Memory Info | Details like page table, memory limits, segment table. |
| 9️⃣ | Accounting Info | CPU time used, process start time, user ID, etc. |
| 🔟 | I/O Status Info | List of I/O devices used, files opened, etc. |

Note:

- The structure of the PCB depends on the operating system.
- It's essential for multitasking because it helps the OS switch between processes.
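A simplified PCB can be modelled as a Python dataclass with one field per row of the table above. The field names, the `process_table` dictionary and the `create_process` helper are hypothetical illustrations; a real kernel keeps this information in its own C structures (Linux, for example, uses `task_struct`).

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set

@dataclass
class PCB:
    """Simplified Process Control Block (field names are illustrative)."""
    pid: int                                                  # Process ID
    state: str = "Start"                                      # Process State
    privileges: Set[str] = field(default_factory=set)         # Process Privileges
    parent_pid: Optional[int] = None                          # Pointer to parent
    program_counter: int = 0                                  # next instruction
    registers: Dict[str, int] = field(default_factory=dict)   # saved CPU registers
    priority: int = 0                                         # CPU scheduling info
    page_table: Dict[int, int] = field(default_factory=dict)  # memory info
    cpu_time_used: float = 0.0                                # accounting info
    open_files: List[str] = field(default_factory=list)       # I/O status info

process_table: Dict[int, PCB] = {}   # one PCB per process, keyed by PID

def create_process(pid: int, parent_pid: Optional[int] = None, priority: int = 0) -> PCB:
    """Create a PCB, admit the process (Start -> Ready) and record it."""
    pcb = PCB(pid=pid, parent_pid=parent_pid, priority=priority)
    pcb.state = "Ready"
    process_table[pid] = pcb
    return pcb

create_process(1)                                  # first process, no parent
shell = create_process(42, parent_pid=1, priority=5)
shell.open_files.append("terminal")                # hypothetical open device

print(process_table[42].state, process_table[42].parent_pid)  # Ready 1
```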

Process State Diagram

3. MEMORY MANAGEMENT

Memory management is a key function of the Operating System (OS) that controls how main memory (RAM) is used by processes. It decides:

- Which process gets how much memory
- When a process gets memory
- When memory is freed and reused

Key Responsibilities of Memory Management:

| 🔢 | Function | Explanation |
|---|---|---|
| 1️⃣ | Tracks memory usage | Keeps a record of each memory block: whether it is allocated or free. |
| 2️⃣ | Allocates memory | Gives memory to processes when they need it. |
| 3️⃣ | Manages swapping | Moves processes in and out of memory to/from disk during execution. |
| 4️⃣ | Deallocates memory | Frees up memory when a process finishes or no longer needs it. |
| 5️⃣ | Prevents conflicts | Ensures one process doesn't use memory assigned to another (memory protection). |

Why Memory Management Is Important:

- Ensures efficient use of memory.
- Supports multitasking by managing multiple processes.
- Helps avoid crashes or corruption due to memory conflicts.
- Balances speed vs. space (RAM is fast but limited; disk is slow but large).

3.1. Memory Management Concepts


Process Address Space

What Is Process Address Space?

It's the range of memory addresses (logical/virtual) a process can access.

Types of Memory Addresses

| No. | Type | Description |
|---|---|---|
| 1️⃣ | Symbolic Address | Used in source code (e.g., variable names, labels like x, main:). |
| 2️⃣ | Relative Address | Created by the compiler, relative to the program's start. |
| 3️⃣ | Physical Address | Final memory address used by hardware. Created when the program is loaded. |

Key Terminology

| Term | Meaning |
|---|---|
| Logical Address | (a.k.a. Virtual Address) – Generated by the CPU during execution. |
| Physical Address | Actual address in main memory (RAM). |
| Logical Address Space | All logical addresses a program can use. |
| Physical Address Space | All physical memory addresses accessible in RAM. |

Address Binding Types

| Type | When Virtual = Physical? | Description |
|---|---|---|
| Compile-time | Yes | If memory location is known at compile-time. |
| Load-time | Yes | If memory location is decided when program is loaded. |
| Execution-time | No | Uses MMU to map virtual → physical at runtime (most common). |

Memory Management Unit

- A hardware device.
- Maps virtual addresses (used by processes) to physical addresses (used by RAM).
- How It Works:
  - Suppose the base register = 10000.
  - If the program accesses address 100, the MMU adds the base: 100 + 10000 = 10100. This is the physical address used.

N/B

- Virtual addressing allows for memory protection and multitasking.
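The base-register arithmetic above can be written as a short Python function. The limit check and the limit value of 4000 are assumptions added for illustration (they mirror the relocation and limit registers used for protection in single-partition allocation), and `AddressTrap` is a hypothetical stand-in for the hardware trap a real MMU raises.

```python
class AddressTrap(Exception):
    """Hypothetical stand-in for the trap the hardware raises on a bad address."""

def mmu_translate(logical_address: int, base: int, limit: int) -> int:
    """Relocate a logical address using a base (relocation) and a limit register."""
    if not 0 <= logical_address < limit:   # protection: stay inside the process
        raise AddressTrap(f"address {logical_address} is outside 0..{limit - 1}")
    return base + logical_address          # relocation: add the base register

print(mmu_translate(100, base=10000, limit=4000))   # 10100
```

An access such as `mmu_translate(5000, base=10000, limit=4000)` raises `AddressTrap` instead of touching another process's memory.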

Static vs Dynamic Loading & Linking

1. Loading

| Type | Static Loading | Dynamic Loading |
|---|---|---|
| When? | Done at compile/load time | Done at runtime |
| How? | Entire program (including all modules) is loaded into memory before execution starts | Only needed modules are loaded when required |
| Speed | Faster execution (everything is preloaded) | Slower initially (modules loaded on demand) |
| Memory Use | Higher – loads everything up front | More efficient – loads only what's needed |

2. Linking

| Type | Static Linking | Dynamic Linking |
|---|---|---|
| When? | Happens at compile time | Happens at runtime |
| How? | All code from external libraries/modules is copied into the executable | Only a reference to the external library is included |
| Examples | .exe files with all code inside | Windows: .dll (Dynamic Link Library); Unix: .so (Shared Object) |
| Executable Size | Bigger (includes everything) | Smaller (just contains references) |
| Flexibility | Less flexible – changes require recompilation | More flexible – library updates don't require recompiling |

Key Differences Summary

| Feature | Static | Dynamic |
|---|---|---|
| Loading time | Compile/load time | Runtime |
| Linking time | Compile time | Runtime |
| Speed | Faster | Slower (initially) |
| Flexibility | Low | High |
| Memory efficiency | Low | High |

When to Use

- Static: Use when you want faster performance, no external dependencies, or a standalone executable.
- Dynamic: Use when you want modular code, shared libraries, and easier updates.

N/B (Memory Management Unit)

- User programs only see virtual addresses, never actual physical memory.
- The MMU handles all address conversions automatically and securely.

3.2. Memory Allocation Techniques

Swapping

Swapping is a mechanism in which a process can be temporarily swapped (moved) out of main memory to secondary storage (disk), making that memory available to other processes. At some later time, the system swaps the process back from secondary storage into main memory.

Although swapping usually affects performance, it helps run multiple large processes in parallel, which is why swapping is also described as a technique for memory compaction.
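A worked example of swap time under stated assumptions: a hypothetical 2048 KB process and a disk that transfers 1024 KB per second, ignoring seek time, rotational latency and other overhead. Swapping a process out and later back in moves it twice.

```python
def swap_time_ms(process_size_kb: float, transfer_rate_kb_per_s: float) -> float:
    """Time to swap a process out and back in, ignoring seek/latency overhead."""
    one_way_seconds = process_size_kb / transfer_rate_kb_per_s
    return 2 * one_way_seconds * 1000   # out + in, converted to milliseconds

# Hypothetical figures: 2048 KB process, 1024 KB/s transfer rate.
print(swap_time_ms(2048, 1024))   # 4000.0
```

Each direction takes 2048 / 1024 = 2 seconds, so a full swap costs about 4 seconds plus overhead, which illustrates why heavy swapping slows a system down.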

Memory Allocation

Main Memory Layout

- Main memory usually has two partitions:
  - Low Memory – Operating system resides in this memory.
  - High Memory – User processes are held in high memory.

| Type | Description |
|---|---|
| Single-Partition Allocation | Uses relocation + limit register to protect OS & processes. |
| Multiple-Partition Allocation | Memory is split into fixed-sized partitions. Each partition holds one process. |

Fragmentation

| Type | Description |
|---|---|
| External | Enough total memory exists, but not in a contiguous block. |
| Internal | Memory block > process size, leading to unused space inside the block. |

Solutions

- External → Use Compaction (rearrange memory)
- Internal → Allocate best-sized block (just big enough)
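The two kinds of fragmentation and the compaction fix can be illustrated with a few lines of Python. The partition, process and hole sizes (in KB) are hypothetical, and `compact` only models the result of compaction (all free space merged into one hole), not the work of actually moving processes around in memory.

```python
from typing import List

def internal_fragmentation(partition_kb: int, process_kb: int) -> int:
    """Unused space inside a fixed partition that holds one process."""
    if process_kb > partition_kb:
        raise ValueError("process does not fit in this partition")
    return partition_kb - process_kb

def has_external_fragmentation(holes_kb: List[int], request_kb: int) -> bool:
    """True if total free memory is enough but no single hole is big enough."""
    return sum(holes_kb) >= request_kb and max(holes_kb, default=0) < request_kb

def compact(holes_kb: List[int]) -> List[int]:
    """Result of compaction: all free space merged into one contiguous hole."""
    return [sum(holes_kb)] if holes_kb else []

print(internal_fragmentation(100, 90))   # 10 KB wasted inside the partition

holes = [30, 20, 40]                                   # 90 KB free in total
print(has_external_fragmentation(holes, 60))           # True: largest hole is 40 KB
print(has_external_fragmentation(compact(holes), 60))  # False: one 90 KB hole
```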

Contiguous Memory Allocation

Single-Partition

- OS in low memory, user processes in high memory
- Use relocation and limit registers for protection

Multiple-Partition

- Divide memory into fixed partitions
- Each partition runs one process
- OS maintains free/used memory table

Allocation Strategies

| Strategy | Description |
|---|---|
| First-fit | First block that fits is allocated |
| Best-fit | Smallest block that fits is allocated |
| Worst-fit | Largest available block is used |
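The three strategies differ only in which free hole they choose. This Python sketch (the hole sizes and the 212 KB request are hypothetical) returns the index of the chosen hole, or `None` when no hole is large enough; the illustrative `allocate` helper then shrinks the chosen hole, leaving the remainder free.

```python
from typing import Callable, List, Optional

def first_fit(holes: List[int], request: int) -> Optional[int]:
    """Index of the first hole that is large enough."""
    for i, size in enumerate(holes):
        if size >= request:
            return i
    return None

def best_fit(holes: List[int], request: int) -> Optional[int]:
    """Index of the smallest hole that is large enough."""
    fits = [i for i, size in enumerate(holes) if size >= request]
    return min(fits, key=lambda i: holes[i]) if fits else None

def worst_fit(holes: List[int], request: int) -> Optional[int]:
    """Index of the largest hole (if it is large enough)."""
    fits = [i for i, size in enumerate(holes) if size >= request]
    return max(fits, key=lambda i: holes[i]) if fits else None

def allocate(holes: List[int], request: int,
             strategy: Callable[[List[int], int], Optional[int]]) -> Optional[int]:
    """Allocate from the chosen hole; the leftover stays as a smaller hole."""
    i = strategy(holes, request)
    if i is not None:
        holes[i] -= request
    return i

holes = [100, 500, 200, 300, 600]   # free holes in KB
for strategy in (first_fit, best_fit, worst_fit):
    print(strategy.__name__, strategy(holes, 212))
# first_fit 1   (500 KB hole)
# best_fit 3    (300 KB hole)
# worst_fit 4   (600 KB hole)
```

Best-fit leaves the smallest leftover (88 KB here), while worst-fit leaves the largest (388 KB), which may still be big enough for a later request.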

Paging

Paging is a memory management technique used by operating systems to avoid external fragmentation and efficiently manage memory.

Key Points

- Divides memory into fixed-size blocks:
  - Pages (logical)
  - Frames (physical)
- No external fragmentation
- Internal fragmentation may occur

Address Translation

In an operating system that uses paging, every time a program accesses memory (for data or instructions), the address it uses is not the real physical address in RAM. Instead, it uses a logical address (also called a virtual address). The operating system and hardware work together to convert this into a physical address that actually points to a location in RAM.

- Logical Address = (Page Number, Offset), where Page Number = logical address ÷ page size (integer division) and Offset = logical address mod page size
- Physical Address = Frame Number × Page Size + Offset
- The Page Table maps pages → frames
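A minimal Python sketch of paging address translation, assuming a 4096-byte page size and a small hypothetical page table. The page number and offset come from integer division and remainder; because page sizes are powers of two, hardware obtains the same values by splitting the address bits (here, the low 12 bits are the offset). `PageFault` is an illustrative exception name.

```python
PAGE_SIZE = 4096                    # assumed page (and frame) size in bytes
page_table = {0: 5, 1: 2, 2: 7}     # hypothetical mapping: page number -> frame number

class PageFault(Exception):
    """Raised when the page is not mapped to any frame."""

def paging_translate(logical_address: int) -> int:
    """Convert a logical address to a physical address via the page table."""
    page_number, offset = divmod(logical_address, PAGE_SIZE)
    if page_number not in page_table:
        raise PageFault(f"page {page_number} is not in memory")
    frame_number = page_table[page_number]
    return frame_number * PAGE_SIZE + offset

# Same split using bits, since 4096 == 2**12:
assert (4100 >> 12, 4100 & 0xFFF) == divmod(4100, PAGE_SIZE)

print(paging_translate(4100))   # page 1, offset 4 -> frame 2 -> 2*4096 + 4 = 8196
```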

Advantages & Disadvantages

| Pros | Cons |
|---|---|
| No external fragmentation | Page Table takes space |
| Easy swapping (equal size) | May cause internal fragmentation |
| Efficient implementation | -- |

Segmentation

Key Points

- Divides a process into logical segments (e.g., code, stack, data)
- Variable-size segments (unlike fixed-size pages)
- Segments are loaded into non-contiguous memory, but each segment itself is contiguous

Address Format

- Logical Address = (Segment Number, Offset)
- Segment Table stores, for each segment:
  - Base (starting address)
  - Length (size)
- Physical Address = Base + Offset, valid only if Offset < Length
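Segment-table translation differs from paging in that each segment has its own length, which must be checked on every access. This Python sketch uses a hypothetical segment table; `SegmentationFault` is an illustrative exception standing in for the hardware trap.

```python
# Hypothetical segment table: segment number -> (base, length)
segment_table = {
    0: (1400, 1000),   # e.g., code
    1: (6300, 400),    # e.g., stack
    2: (4300, 1100),   # e.g., data
}

class SegmentationFault(Exception):
    """Illustrative stand-in for the trap raised on an invalid segment access."""

def segment_translate(segment: int, offset: int) -> int:
    """Convert (segment, offset) into a physical address."""
    if segment not in segment_table:
        raise SegmentationFault(f"no segment {segment}")
    base, length = segment_table[segment]
    if not 0 <= offset < length:             # offset must lie inside the segment
        raise SegmentationFault(f"offset {offset} exceeds length {length}")
    return base + offset                     # physical address

print(segment_translate(2, 53))   # 4300 + 53 = 4353
```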

| Paging | Segmentation |
|---|---|
| Fixed-size pages | Variable-size segments |
| Based on fixed-size memory blocks | Based on logical divisions in a program |
| Less flexible | More flexible, more natural |